Table of contents

- NoSQL Database: Breaking the Relational Mold

- Introduction to Amazon DynamoDB

- Getting Started with Amazon DynamoDB

- Creating DynamoDB Table and Items

- SCAN & QUERY Operations in DDB Table

- Core Components of DynamoDB Table

- Global & Local Secondary Indexes in DDB

- DynamoDB Point in Time Recovery (PITR)

- Monitoring and Logging in DDB Table

- Global Tables in Amazon DynamoDB

- Exports and Streams in DDB Table

- Additional Settings in DynamoDB Table

- DynamoDB Accelerator (DAX)

- Real-World Examples of DynamoDB

- References

- Conclusion

In a world of ever-growing data, your applications need a storage solution that bends, not breaks. Enter DynamoDB, the blazing-fast NoSQL database from Amazon Web Services. Imagine a database that scales to massive workloads, handles varied data structures with ease, and lets you focus on building amazing apps instead of managing servers. That's the DynamoDB magic: a dynamic, flexible companion for your high-performance, cloud-native adventures.

Buckle up, because we're diving deep into the DynamoDB universe, exploring its superpowers and unlocking its potential to revolutionize your data storage game!

NoSQL Database: Breaking the Relational Mold

In the realm of data storage, NoSQL databases have emerged as a powerful alternative to traditional relational databases. They offer distinct advantages for applications demanding flexibility, scalability, and high performance in handling massive, unstructured, or rapidly evolving data.

Depending on their type, NoSQL databases store information as key-value pairs, JSON-like documents, wide columns, or graphs, instead of the fixed rows and columns used by relational databases. To be clear, NoSQL stands for "not only SQL" rather than "no SQL" at all. Because they don't force every record into a predefined table schema, NoSQL databases are built to be flexible, scalable, and capable of responding quickly to the data management demands of modern businesses. Their main advantages, high scalability and high availability, make them popular for real-time web applications and big data.

Think of it like this:

Relational Databases: Like a meticulously organized library, each book is classified into specific categories (tables), has defined chapters (columns), and every page (row) holds neatly formatted text.

NoSQL Databases: Like a treasure chest filled with diverse items, each labeled with a unique tag (key) and containing various objects, documents, or even pictures – all organized in a way that makes sense for the specific purpose.

Introduction to Amazon DynamoDB

Amazon DynamoDB is a fully managed NoSQL database service offered by AWS. It supports both key-value and document data models, allowing users to store, retrieve, and manage large amounts of structured and semi-structured data. It is designed for the seamless development and scaling of applications, offering high-performance, low-latency access to data.

DynamoDB is particularly known for its ability to handle massive workloads and provide consistent, fast performance across use cases like gaming, mobile and web applications, and Internet of Things (IoT) devices. It also has a flexible schema: apart from the primary key attributes, items in the same table can have different attributes, and you can add new attributes at any time without altering the table definition. This makes it an ideal choice for modern applications that require cost-effective and scalable solutions.

Getting Started with Amazon DynamoDB

In DynamoDB, tables, items, and attributes are the core components that you work with. A table is a collection of items, and each item is a collection of attributes. DynamoDB uses a primary key to uniquely identify each item in a table. Apart from the primary key, you don't need to define a schema when creating a table, and secondary indexes provide additional querying flexibility.

Primary Key

The primary key uniquely identifies each item and must be defined when you create the table. Every item you insert must include the primary key attribute(s), and they can never be null. E.g. PersonID is the primary key for the table PersonRecords. No two items can have the same primary key.

DynamoDB supports two types of Primary keys.

Simple Primary Key

A simple primary key consists of a single attribute, known as the partition key. DynamoDB uses the partition key's value to decide which internal partition stores the item and to distinguish items in the table.

E.g. PersonID in the PersonRecords table.

Composite Primary Key

A composite primary key is made up of two attributes: a partition key and a sort key. The first component is the partition key and the second is the sort key. Several items can share the same partition key value, but the combination of partition key and sort key must be unique.

E.g. PersonDetails table with PersonID (partition key) and PersonContactNumber (sort key) as a composite primary key.
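To make the two key types concrete, here is a minimal Python sketch of the `KeySchema` and `AttributeDefinitions` structures that the boto3 low-level client's `create_table` call takes. The partition key is declared with `KeyType` `HASH` and the sort key with `RANGE`. The helper function names are hypothetical, and treating `PersonContactNumber` as a string (`S`) is an assumption. Nothing here talks to AWS.

```python
def simple_key_schema(partition_key, key_type):
    """Simple primary key: a single partition (HASH) key attribute."""
    return {
        "KeySchema": [{"AttributeName": partition_key, "KeyType": "HASH"}],
        "AttributeDefinitions": [
            {"AttributeName": partition_key, "AttributeType": key_type}
        ],
    }


def composite_key_schema(partition_key, pk_type, sort_key, sk_type):
    """Composite primary key: partition (HASH) key plus sort (RANGE) key."""
    return {
        "KeySchema": [
            {"AttributeName": partition_key, "KeyType": "HASH"},
            {"AttributeName": sort_key, "KeyType": "RANGE"},
        ],
        "AttributeDefinitions": [
            {"AttributeName": partition_key, "AttributeType": pk_type},
            {"AttributeName": sort_key, "AttributeType": sk_type},
        ],
    }


# "N" = number, "S" = string
person_records = simple_key_schema("PersonID", "N")
person_details = composite_key_schema("PersonID", "N", "PersonContactNumber", "S")
print(person_details["KeySchema"])
```

Only key attributes are declared up front; every other attribute of an item stays schemaless.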

Let's look at an example of a table with a simple primary key:

```jsonc
// Below is a "PersonRecords" table with primary key as "PersonID" (unique identifier).

// first person
{
  "PersonID": 101,
  "FirstName": "Fred",
  "LastName": "Smith",
  "Phone": "555-4321"
}

// second person
{
  "PersonID": 102,
  "FirstName": "Mary",
  "LastName": "Jones",
  "Address": {
    "Street": "123 Main",
    "City": "Anytown",
    "State": "OH",
    "ZIPCode": 12345
  }
}

// third person
{
  "PersonID": 103,
  "FirstName": "Howard",
  "LastName": "Stephens",
  "Address": {
    "Street": "123 Main",
    "City": "London",
    "PostalCode": "ER3 5K8"
  },
  "FavoriteColor": "Blue"
}
```
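On the wire, DynamoDB stores each attribute with an explicit type descriptor (DynamoDB JSON), which is the format the low-level API and table exports use. The sketch below converts plain items like the ones above into that format. It is a simplified, hypothetical helper: boto3 ships a real one (`boto3.dynamodb.types.TypeSerializer`). Like that serializer, this sketch rejects Python `float` in favor of `int` or `Decimal`. Sets and binary values are deliberately left out.

```python
from decimal import Decimal


def to_attribute_value(value):
    """Convert a plain Python value into a DynamoDB typed AttributeValue."""
    if value is None:
        return {"NULL": True}
    if isinstance(value, bool):  # check bool first: bool is a subclass of int
        return {"BOOL": value}
    if isinstance(value, (int, Decimal)):
        return {"N": str(value)}  # numbers are sent as strings
    if isinstance(value, str):
        return {"S": value}
    if isinstance(value, dict):
        return {"M": {k: to_attribute_value(v) for k, v in value.items()}}
    if isinstance(value, list):
        return {"L": [to_attribute_value(v) for v in value]}
    raise TypeError(f"unsupported type: {type(value).__name__}")


def to_dynamodb_item(item):
    """Serialize every top-level attribute of an item."""
    return {name: to_attribute_value(v) for name, v in item.items()}


mary = {
    "PersonID": 102,
    "FirstName": "Mary",
    "LastName": "Jones",
    "Address": {"Street": "123 Main", "City": "Anytown", "State": "OH", "ZIPCode": 12345},
}
print(to_dynamodb_item(mary))
```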

Creating DynamoDB Table and Items

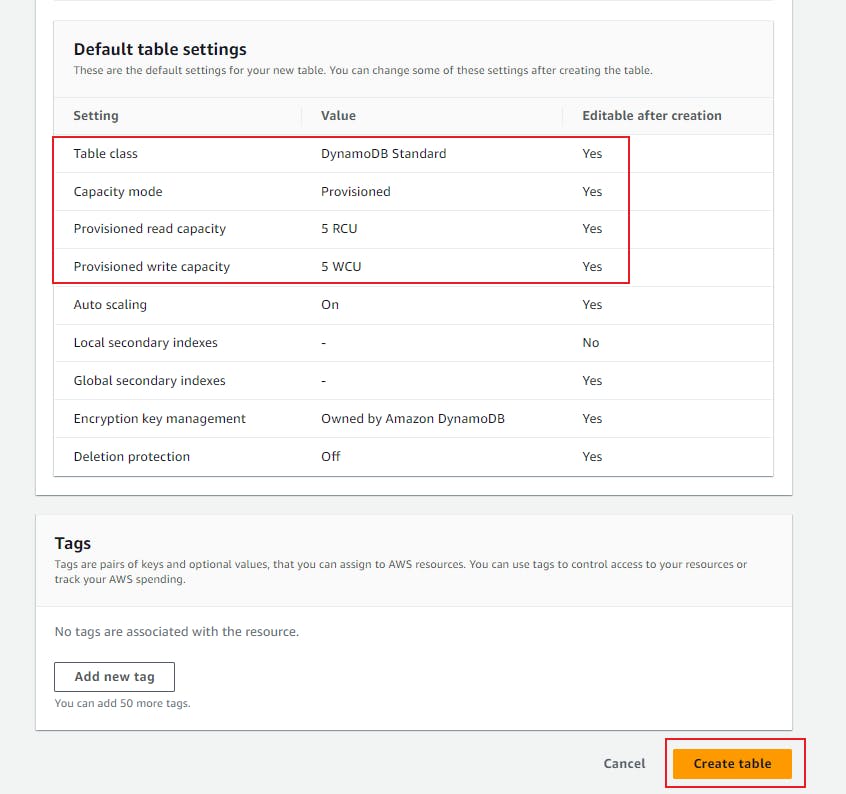

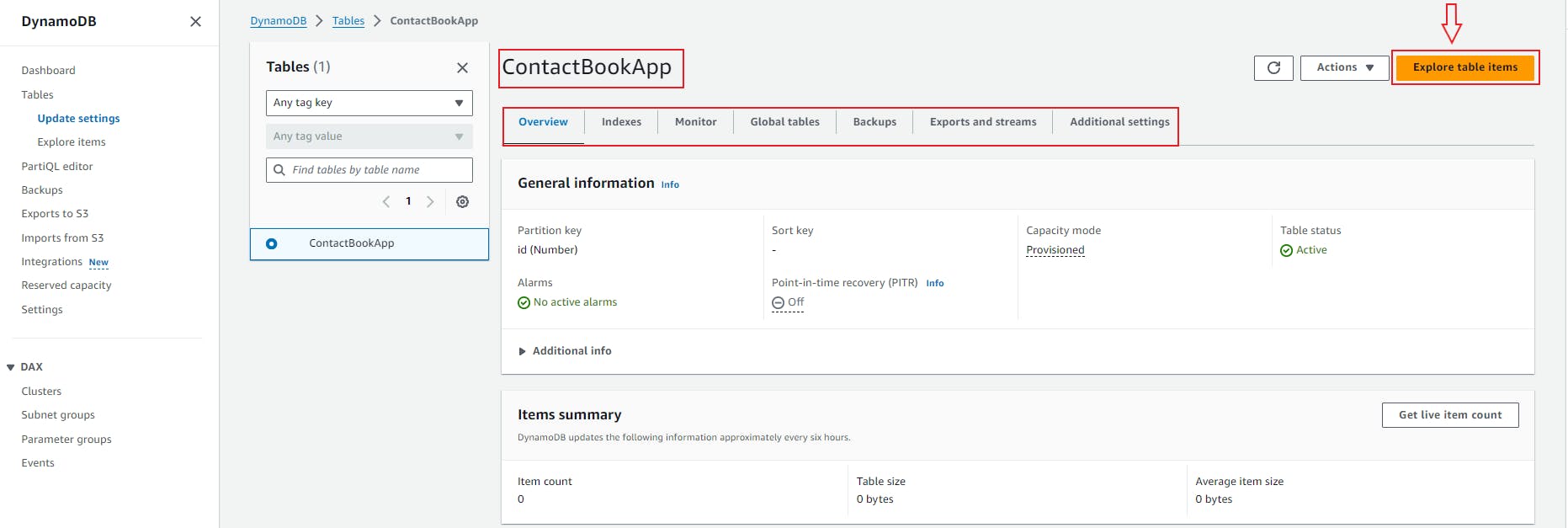

Let's create a DynamoDB table with the default settings, then use the table's Explore items view to add items to it.

I created some items beforehand, so here we'll create just one item to see how it's done from the console. In a later blog post, we will see how to perform CRUD operations using the Python boto3 SDK.

We now have a complete table with all of its items.
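Because the console hides the underlying request, here is a sketch of what adding an item looks like as a `put_item` request for the boto3 low-level client. One detail is worth knowing: `PutItem` replaces any existing item with the same primary key. To prevent that, the sketch adds a `ConditionExpression` that makes the write fail instead. The item values (PersonID 104, "Alex Doe") and the helper name are hypothetical.

```python
def put_new_person_request(table_name, item):
    """Build put_item arguments that refuse to overwrite an existing PersonID."""
    if "PersonID" not in item:
        raise ValueError("every item must include the primary key attribute")
    return {
        "TableName": table_name,
        "Item": item,  # attributes in DynamoDB JSON (typed) format
        # Without this condition, PutItem silently replaces an item with the same key.
        "ConditionExpression": "attribute_not_exists(PersonID)",
    }


request = put_new_person_request(
    "PersonRecords",
    {"PersonID": {"N": "104"}, "FirstName": {"S": "Alex"}, "LastName": {"S": "Doe"}},
)
```

With AWS credentials configured, `boto3.client("dynamodb").put_item(**request)` would send this request. If PersonID 104 already exists, the call raises `ConditionalCheckFailedException`.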

SCAN & QUERY Operations in DDB Table

Scan Operation

The Scan operation in DynamoDB allows you to retrieve all the items in a table. It reads every item in the specified table and returns only those that match the filter conditions, if you provide any. Because the filter expression is applied after the data has been read, Scan consumes read capacity for every item it examines, not just for the ones it returns. This makes it inefficient and costly for large tables compared to Query, and it can take a while to produce the desired output. Each Scan call also returns at most 1 MB of data, so larger tables are read page by page.
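Each Scan call stops after 1 MB of data and returns a `LastEvaluatedKey`, so reading a whole table means looping until that key is absent. The sketch below shows that loop. `scan_page` is assumed to behave like boto3's `client.scan`, returning `Items` and, when more data remains, `LastEvaluatedKey`. A fake two-page scan stands in for it so the example runs offline. boto3 also offers `client.get_paginator("scan")`, which wraps the same loop.

```python
from typing import Callable, Iterator, Optional


def scan_all(scan_page: Callable[..., dict], **scan_kwargs) -> Iterator[dict]:
    """Yield every item from a paginated Scan (FilterExpression etc. go in scan_kwargs)."""
    start_key: Optional[dict] = None
    while True:
        kwargs = dict(scan_kwargs)
        if start_key is not None:
            kwargs["ExclusiveStartKey"] = start_key  # resume where the last page stopped
        page = scan_page(**kwargs)
        yield from page.get("Items", [])
        start_key = page.get("LastEvaluatedKey")
        if start_key is None:  # no more pages
            break


# Fake two-page "scan" standing in for boto3's client.scan in this demo.
PAGES = [
    {"Items": [{"PersonID": {"N": "101"}}], "LastEvaluatedKey": {"PersonID": {"N": "101"}}},
    {"Items": [{"PersonID": {"N": "102"}}, {"PersonID": {"N": "103"}}]},
]


def fake_scan(**kwargs):
    return PAGES[1] if "ExclusiveStartKey" in kwargs else PAGES[0]


items = list(scan_all(fake_scan, TableName="PersonRecords"))
```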

Query Operation

On the other hand, the Query operation is designed for more efficient item retrieval based on the primary key. It retrieves the items that share a specific partition key value, optionally narrowed by a condition on the sort key (for example, equality, a range, or begins_with).

Unlike Scan, which examines every item, Query reads only the items that match the key condition, making it highly efficient for retrieving a specific set of items. It's particularly useful when you want to fetch items with a specific partition key or a range of sort key values. Note that a filter expression on a Query is still applied after the matching items are read. The efficiency gain comes from the key condition, which limits what is read in the first place.
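Here is a sketch of a Query request in boto3 low-level client form. The key condition pins the partition key and can optionally narrow the sort key with `begins_with`. The `#`/`:` placeholders keep attribute names and values out of the expression string, which also avoids clashes with DynamoDB reserved words. The helper name and the `555` prefix are hypothetical, and `begins_with` assumes a string sort key.

```python
def build_query(table_name, pk_name, pk_value, sk_name=None, sk_prefix=None):
    """Build query() arguments: partition key equality, optional sort key prefix."""
    names = {"#pk": pk_name}
    values = {":pk": pk_value}
    condition = "#pk = :pk"
    if sk_name is not None and sk_prefix is not None:
        names["#sk"] = sk_name
        values[":sk"] = sk_prefix
        # Only items whose sort key starts with the prefix are read.
        condition += " AND begins_with(#sk, :sk)"
    return {
        "TableName": table_name,
        "KeyConditionExpression": condition,
        "ExpressionAttributeNames": names,
        "ExpressionAttributeValues": values,
    }


request = build_query(
    "PersonDetails", "PersonID", {"N": "101"},
    sk_name="PersonContactNumber", sk_prefix={"S": "555"},
)
```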

Understanding these differences is crucial for optimizing the performance and cost-effectiveness of DynamoDB queries in your applications.

Core Components of DynamoDB Table

Let's take a high-level look at the components available for each table, one console tab at a time. Later, we will go through each of them in more detail.

Overview

Description: View table details, including item count and metrics.

Use Case: Useful for quickly understanding the general health and size of the table.

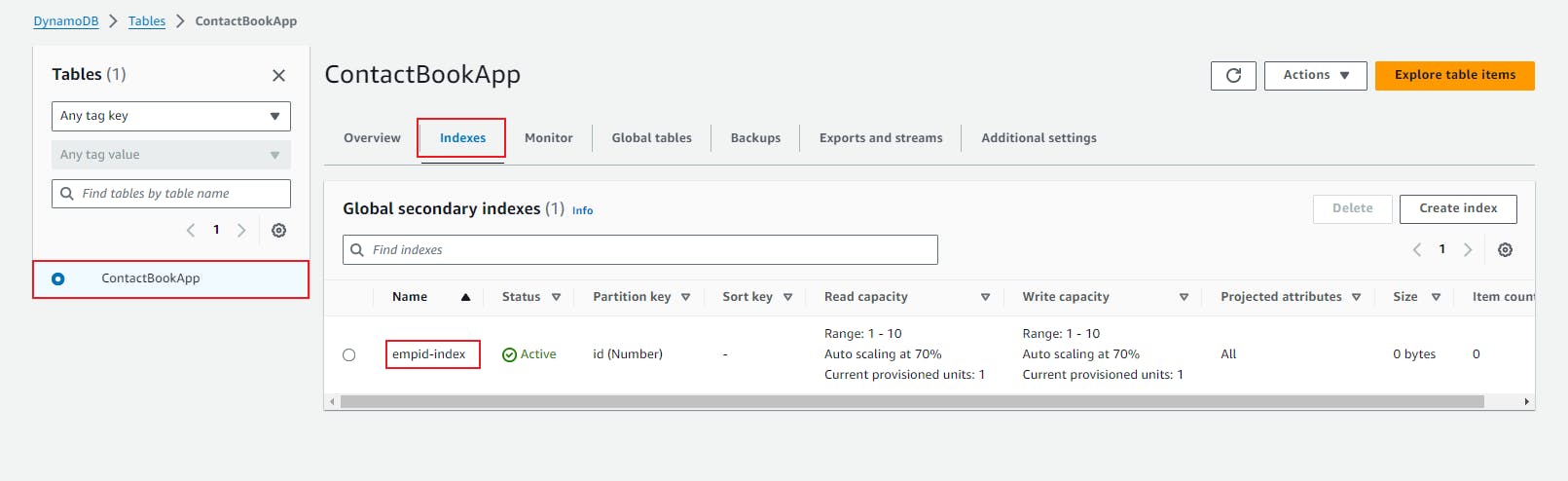

Indexes

Description: Here, you can manage both global and local secondary indexes associated with the table. Global Secondary Indexes (GSI) allow querying across the entire table, while Local Secondary Indexes (LSI) enable querying within a single partition key value.

Use Case: Essential for optimizing query performance based on different access patterns.

Monitor

Description: This section provides insights into the table's performance through CloudWatch metrics and alarms. It also includes CloudWatch Contributor Insights, which helps identify the most frequently accessed and most throttled keys in the table.

Use Case: Monitoring and optimizing the performance of your DynamoDB table.

Global Tables

Description: Manage table replicas across different AWS regions to achieve high availability and fault tolerance. DynamoDB Global Tables enable automatic multi-region replication.

Use Case: Useful for applications requiring global distribution and redundancy.

Backups

Description: This section allows you to manage backups for the table. You can set up point-in-time recovery (PITR) for continuous backups or perform on-demand backups.

Use Case: Ensures data durability and provides recovery options in case of accidental deletions or corruptions.

Exports and streams

Description: Manage DynamoDB Streams and Kinesis Data Streams associated with the table. Additionally, export your table data to Amazon S3 for further analysis or archival.

Use Case: Supports real-time data processing and data archiving.

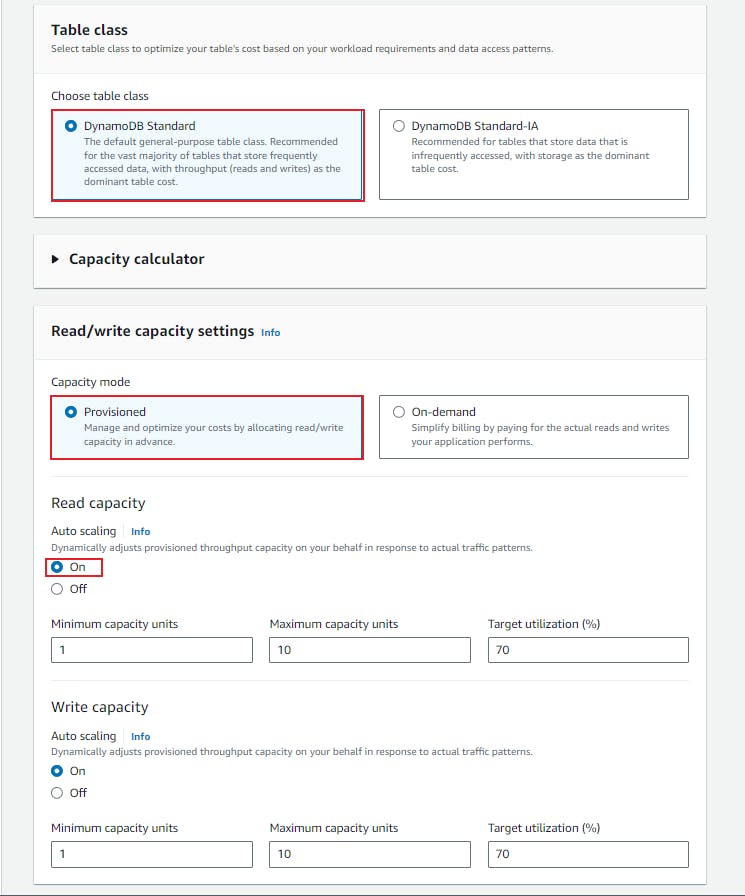

Additional settings

Description: Configure various table settings, including read/write capacity provisioning, Time to Live (TTL) settings for automatic data expiration, encryption at rest, and tagging for better resource organization.

Use Case: Fine-tune table behavior based on application requirements and security considerations.

Understanding and appropriately configuring these components is crucial for optimizing the performance, durability, and functionality of DynamoDB tables per the specific needs of your application.

Global & Local Secondary Indexes in DDB

In DynamoDB, navigating your data efficiently can sometimes require tools beyond the primary key. That's where secondary indexes come in. A secondary index is a data structure that contains a subset of attributes from a table, along with an alternate key to support Query operations.

DynamoDB supports two types of secondary indexes:

- Global Secondary Indexes (GSI)

- Local Secondary Indexes (LSI)

Understanding their strengths and how to utilize them effectively can unlock the full potential of your DynamoDB tables.

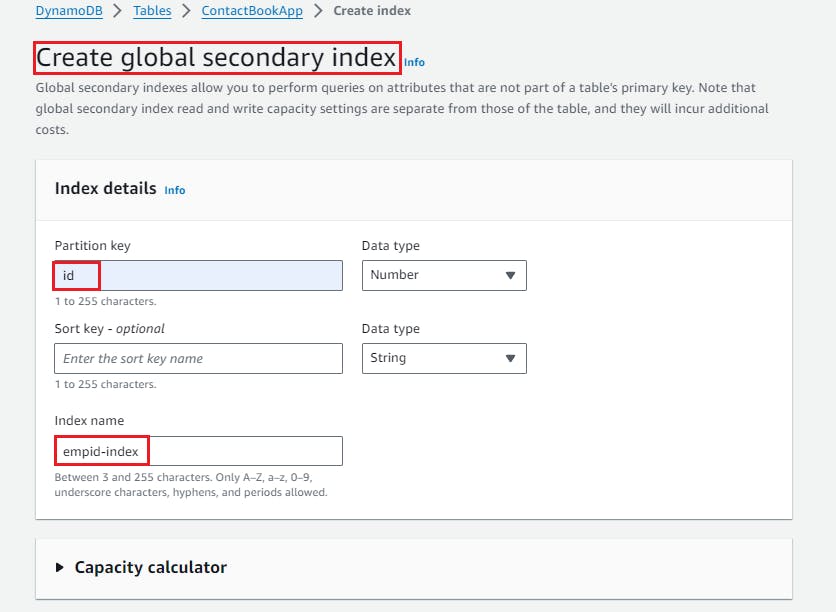

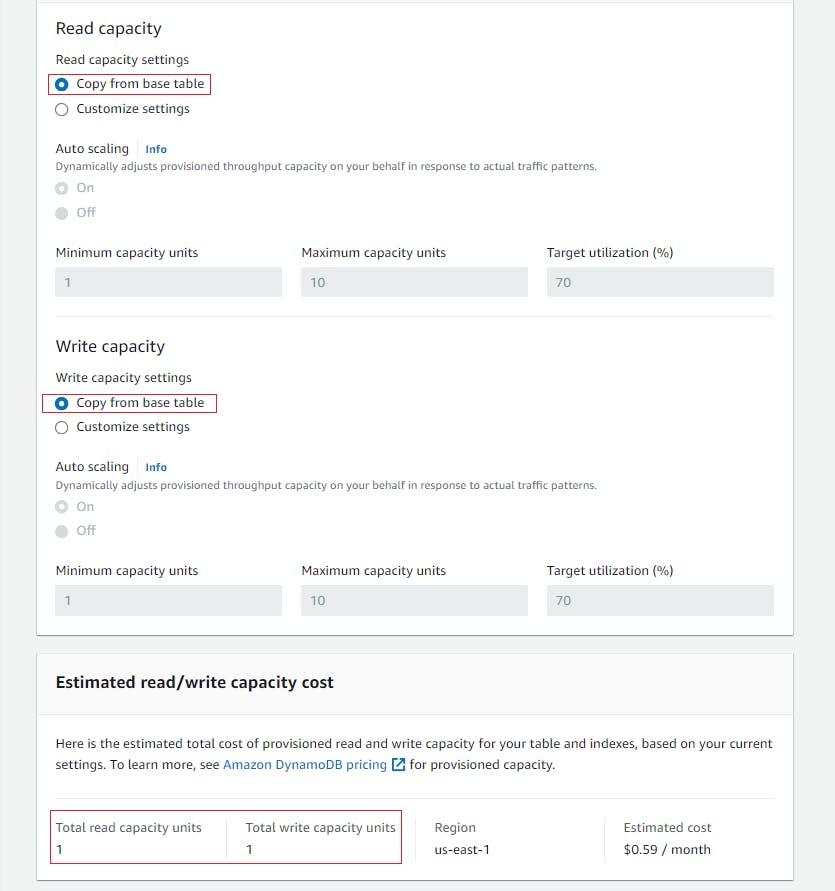

Global Secondary Indexes (GSI)

A Global Secondary Index is an index with a partition key, and optionally a sort key, that can be different from those on the table. GSIs provide flexibility by enabling queries on non-key attributes, which makes them particularly useful when your application needs to support diverse query patterns. GSIs are global, meaning they span all the data in the table across all partitions, and they allow fast and efficient lookups across all items. Queries on a GSI are always eventually consistent.

You can define a GSI when you create the table or add it later. In provisioned capacity mode, each GSI has its own read and write capacity settings, so you can scale index queries independently of the base table.
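Adding a GSI to an existing table corresponds to an `update_table` call with a `GlobalSecondaryIndexUpdates` entry. The sketch below builds those arguments for the boto3 low-level client. The `Project` attribute, the `Project-index` name, and the helper name are hypothetical stand-ins for whatever the console walkthrough uses. The `ProvisionedThroughput` block is included only for provisioned-mode tables, which reflects the fact that each GSI has its own capacity.

```python
def add_gsi_request(table_name, index_name, key_name, key_type, provisioned=None):
    """Arguments for update_table that create a GSI keyed on one attribute."""
    create = {
        "IndexName": index_name,
        "KeySchema": [{"AttributeName": key_name, "KeyType": "HASH"}],
        "Projection": {"ProjectionType": "ALL"},  # copy every attribute into the index
    }
    if provisioned is not None:
        read_units, write_units = provisioned
        # Provisioned-mode tables need capacity for the index itself.
        create["ProvisionedThroughput"] = {
            "ReadCapacityUnits": read_units,
            "WriteCapacityUnits": write_units,
        }
    return {
        "TableName": table_name,
        # Any new key attribute must be declared with its type.
        "AttributeDefinitions": [{"AttributeName": key_name, "AttributeType": key_type}],
        "GlobalSecondaryIndexUpdates": [{"Create": create}],
    }


request = add_gsi_request("PersonRecords", "Project-index", "Project", "S", provisioned=(5, 5))
```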

Let's create a Global Secondary Index (GSI) and later query the item using it.

Global Secondary Index (GSI) has been created successfully.

Now let's filter the item based on the partition key and the project value.

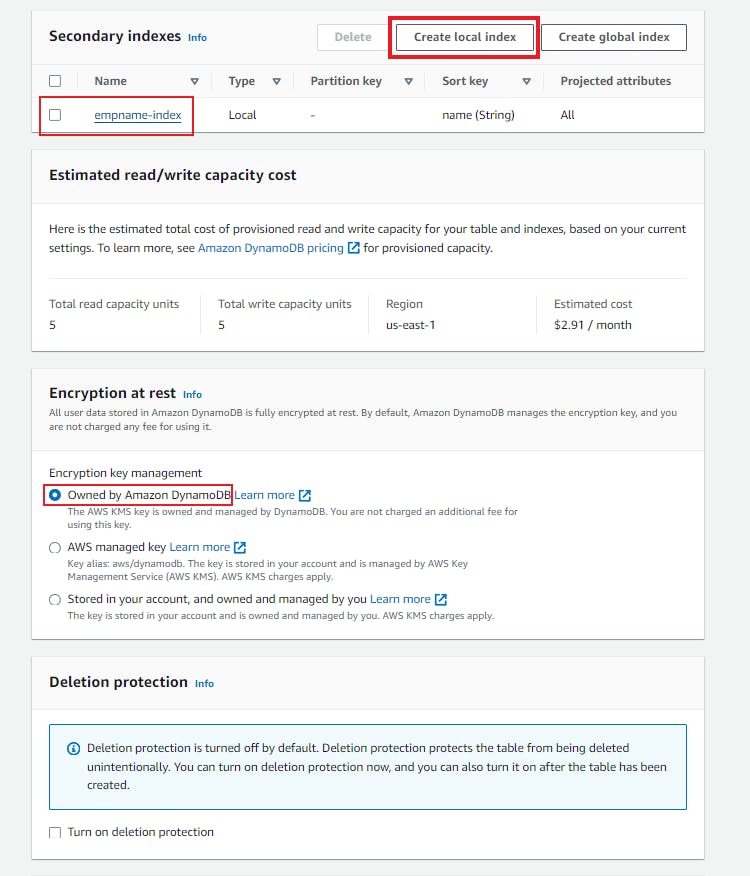
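Querying a GSI works like an ordinary Query with an added `IndexName`. The sketch assumes a hypothetical `Project-index` keyed on a `Project` attribute and uses the value `ProjectX`. `ConsistentRead` is set to `False` explicitly because GSIs support only eventually consistent reads.

```python
def query_gsi_request(table_name, index_name, key_name, key_value):
    """Build query() arguments that read from a global secondary index."""
    return {
        "TableName": table_name,
        "IndexName": index_name,  # read from the index instead of the base table
        "KeyConditionExpression": "#k = :v",
        "ExpressionAttributeNames": {"#k": key_name},
        "ExpressionAttributeValues": {":v": key_value},
        "ConsistentRead": False,  # GSIs reject strongly consistent reads
    }


request = query_gsi_request("PersonRecords", "Project-index", "Project", {"S": "ProjectX"})
```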

Local Secondary Indexes (LSI)

A Local Secondary Index (LSI) is similar to a GSI, but it must have the same partition key as the table and a different sort key. Unlike GSIs, LSIs are scoped to a single partition key value, which makes them an excellent choice when you need to query a range of items that share the same partition key. They share the read and write capacity of the table, so there is no separate capacity to manage, and unlike GSIs they support strongly consistent reads.

LSIs are defined when creating the table and cannot be added later, so careful consideration is required during the initial table design.

Let's create a Local Secondary Index (LSI) and later query the item using it.

For this, we need to create a new table, because an LSI cannot be added to a table after it has been created.

Note: an LSI requires a table with a composite primary key, so while creating the new table we add a sort key in addition to the partition key. This also lets multiple items share the same id (partition key), as long as each has a different sort key value that keeps every item unique.

Here, in the Secondary Indexes section, we are choosing the Local Index option to create the Local Secondary Indexes (LSI).
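Because an LSI has to be declared when the table is created, it appears directly in the `create_table` arguments. In the sketch below, the table name `ProjectTasks`, the `id`/`CreatedAt` keys, the `Status` attribute used as the index sort key, and on-demand billing are all hypothetical choices, not the exact settings from the screenshots.

```python
def create_table_with_lsi_request(table_name, pk, sk, lsi_sort_key, index_name):
    """Arguments for create_table: composite primary key plus one LSI."""
    return {
        "TableName": table_name,
        "KeySchema": [
            {"AttributeName": pk, "KeyType": "HASH"},
            {"AttributeName": sk, "KeyType": "RANGE"},
        ],
        # Declare every attribute used as a key in the table or its indexes.
        "AttributeDefinitions": [
            {"AttributeName": pk, "AttributeType": "S"},
            {"AttributeName": sk, "AttributeType": "S"},
            {"AttributeName": lsi_sort_key, "AttributeType": "S"},
        ],
        "LocalSecondaryIndexes": [{
            "IndexName": index_name,
            # Same partition key as the table, different sort key.
            "KeySchema": [
                {"AttributeName": pk, "KeyType": "HASH"},
                {"AttributeName": lsi_sort_key, "KeyType": "RANGE"},
            ],
            "Projection": {"ProjectionType": "ALL"},
        }],
        "BillingMode": "PAY_PER_REQUEST",
    }


request = create_table_with_lsi_request("ProjectTasks", "id", "CreatedAt", "Status", "Status-index")
```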

Local Secondary Indexes (LSI) have been created successfully.

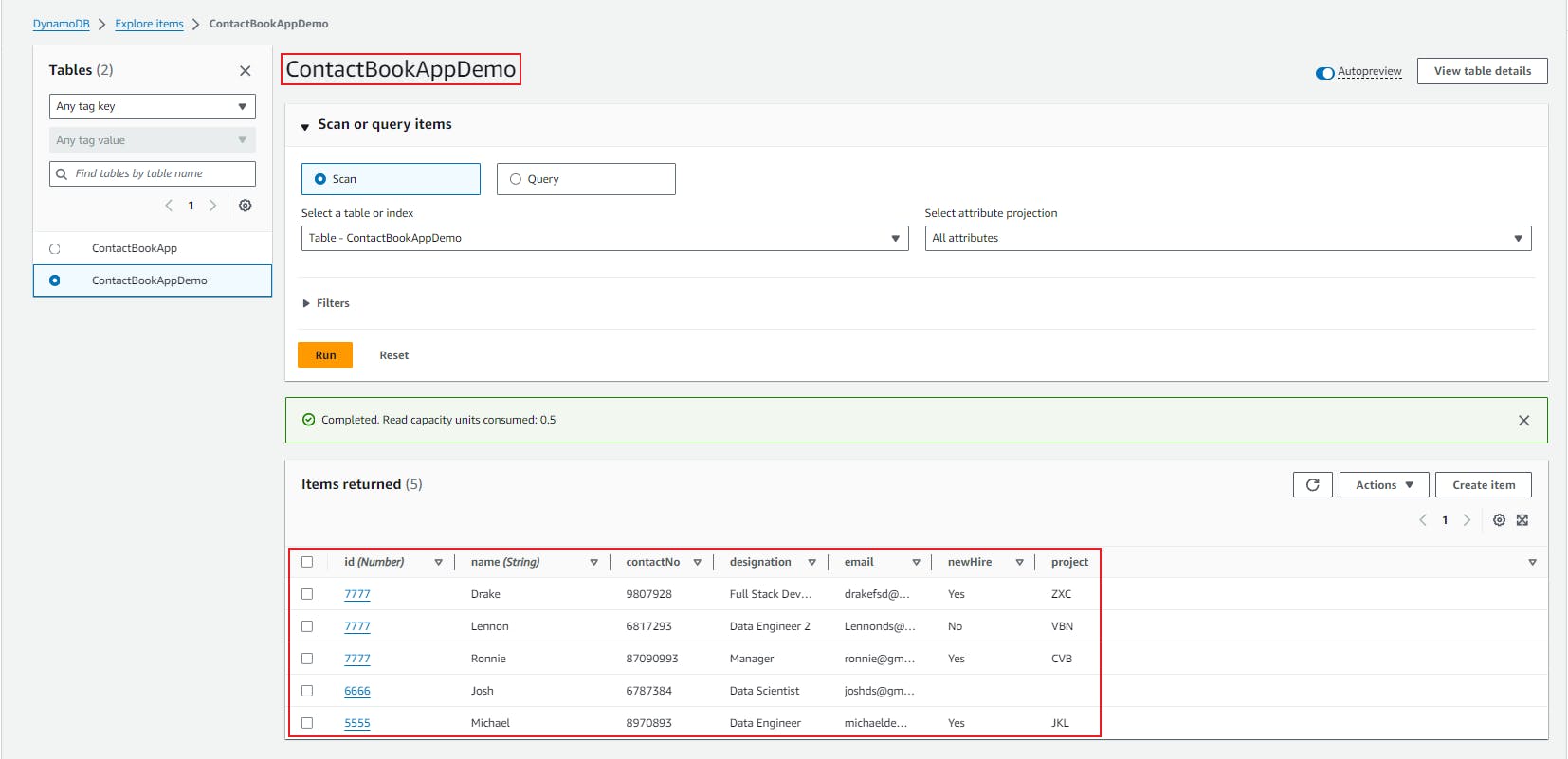

I created some items beforehand (see the screenshot) so that we can go straight to querying them.

Now let's query the items using the partition key and sort key. We can apply some extra filters as well.
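The query described above can be expressed as `query` arguments. The key condition combines the table's partition key with the index sort key, and a `FilterExpression` trims the results after they are read. The sketch targets the hypothetical `ProjectTasks` table and `Status-index` index, and the `Owner` attribute and all values are invented for illustration. Unlike a GSI, an LSI accepts `ConsistentRead=True`.

```python
def query_lsi_request(table_name, index_name, pk_name, pk_value,
                      index_sk_name, index_sk_value, filters=None):
    """Build query() arguments for a local secondary index, with optional filters."""
    names = {"#pk": pk_name, "#sk": index_sk_name}
    values = {":pk": pk_value, ":sk": index_sk_value}
    request = {
        "TableName": table_name,
        "IndexName": index_name,
        "KeyConditionExpression": "#pk = :pk AND #sk = :sk",
        "ConsistentRead": True,  # allowed on LSIs (not on GSIs)
    }
    clauses = []
    for i, (attr, value) in enumerate((filters or {}).items()):
        names[f"#f{i}"] = attr
        values[f":f{i}"] = value
        clauses.append(f"#f{i} = :f{i}")
    if clauses:
        # Applied after the matching items are read, so it doesn't reduce read cost.
        request["FilterExpression"] = " AND ".join(clauses)
    request["ExpressionAttributeNames"] = names
    request["ExpressionAttributeValues"] = values
    return request


request = query_lsi_request(
    "ProjectTasks", "Status-index", "id", {"S": "task-1"},
    "Status", {"S": "OPEN"}, filters={"Owner": {"S": "Mary"}},
)
```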

When to use GSI vs LSI

Use Global Secondary Indexes (GSI) when your application requires diverse query patterns, and you need to efficiently query across all items in the table.

Use Local Secondary Indexes (LSI) when your queries are limited to a specific partition key value, and you want a more cost-effective solution that shares capacity with the table.

DynamoDB Point in Time Recovery (PITR)

Point-in-time recovery helps protect your DynamoDB tables from accidental write or delete operations. With point-in-time recovery, you don't have to worry about creating, maintaining, or scheduling on-demand backups.

For example, suppose that a test script accidentally writes to a production DynamoDB table. With point-in-time recovery, you can restore that table to any point in time during the last 35 days, with per-second granularity. DynamoDB maintains incremental backups of your table.

Key Features of DynamoDB PITR

Continuous Backups:

- It automatically backs up your table data continuously, capturing changes to your data in real-time.

Granular Restore:

- You can restore your table to any specific point in time within the last 35 days, allowing granular control over data recovery.

Access to Historical Data:

- PITR does not let you query past versions of your data in place. To inspect historical data, you restore the table to a chosen point in time (as a new table) and query that table.

Data Loss Prevention:

- It helps prevent data loss due to accidental deletes or updates by providing a way to revert to a previous state.

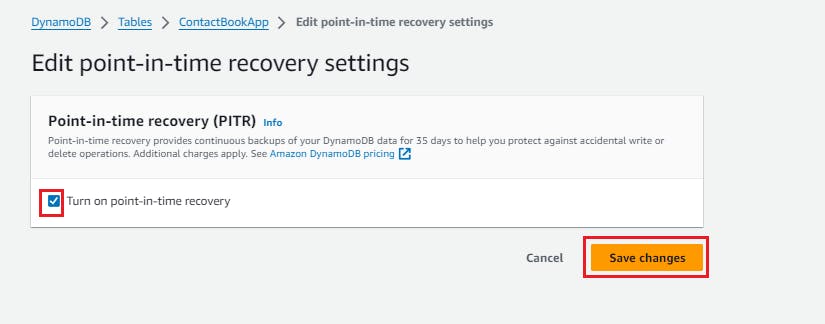

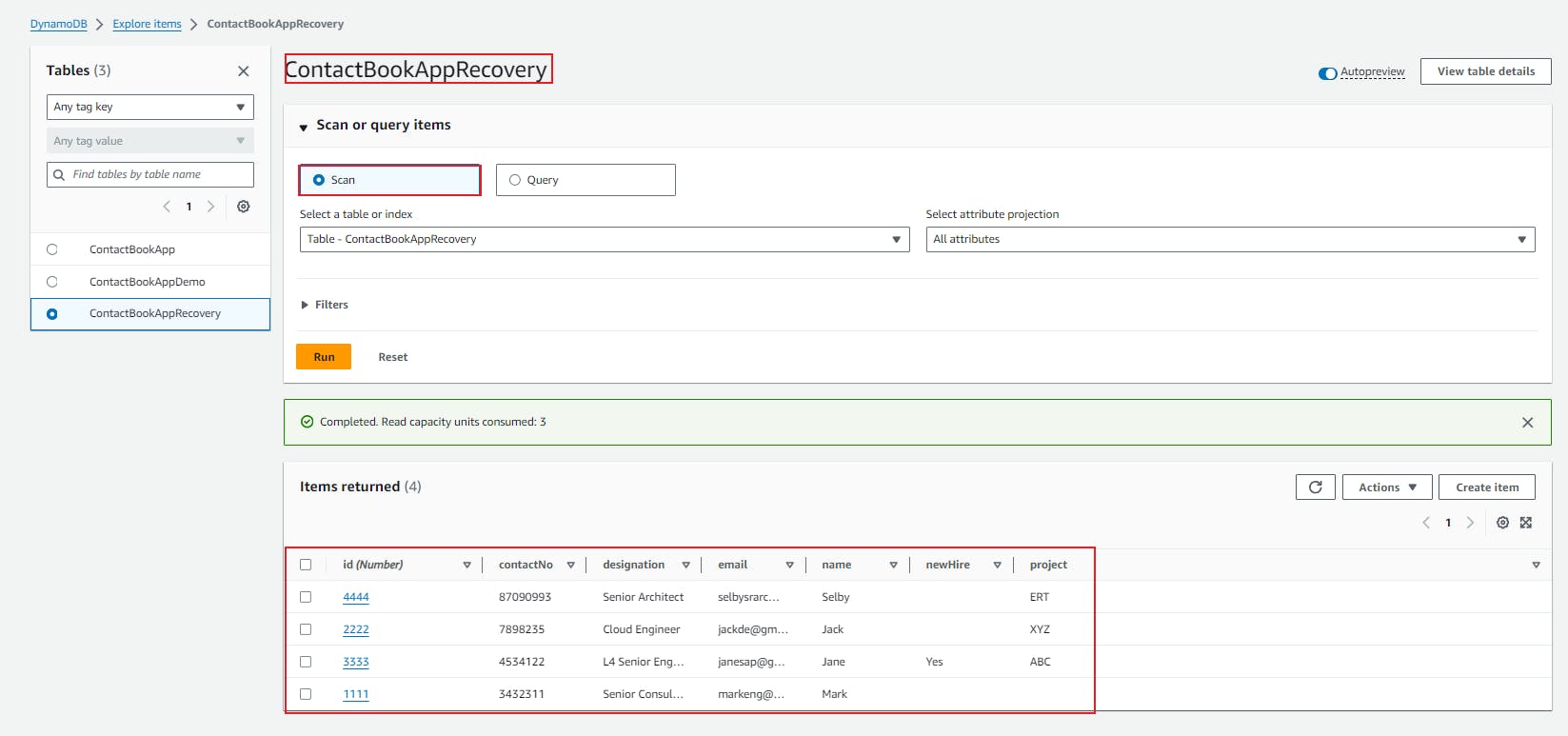

Let's look at how to enable the PITR on the existing DynamoDB table.

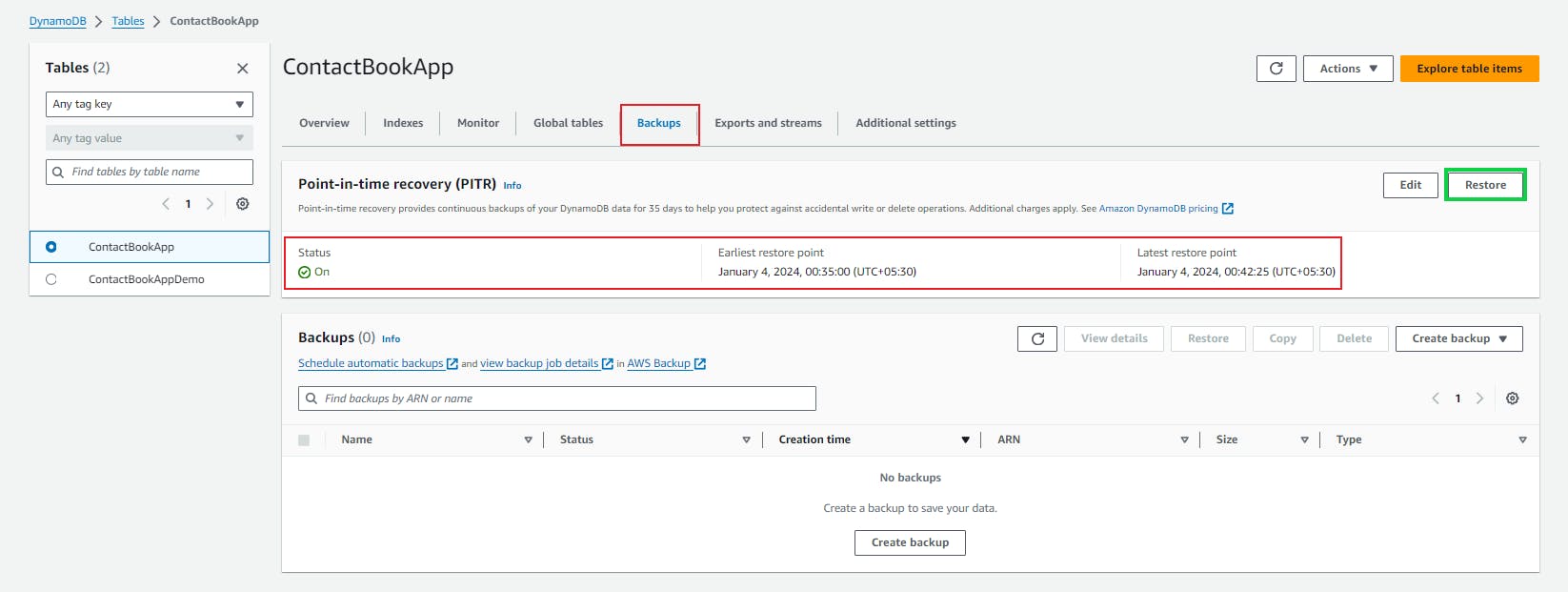

As we can see, PITR has been enabled successfully. Let's now restore the table data.

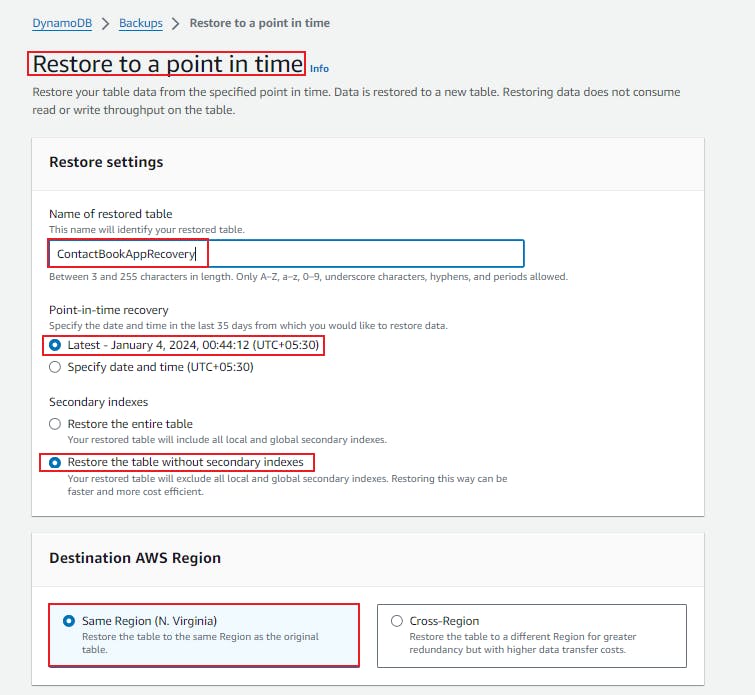

When you restore to a specified point in time, DynamoDB always restores the data into a new table. The source table is left unchanged.
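Both steps have API equivalents: `update_continuous_backups` turns PITR on, and `restore_table_to_point_in_time` restores the data into a new table. The sketch below builds their arguments for the boto3 low-level client. The target table name, the helper names, and the fixed "now" (used so the example is deterministic) are hypothetical. The window check assumes the default 35-day recovery period.

```python
from datetime import datetime, timedelta, timezone

RECOVERY_WINDOW = timedelta(days=35)  # assumption: default PITR recovery period


def enable_pitr_request(table_name):
    """Arguments for update_continuous_backups that turn PITR on."""
    return {
        "TableName": table_name,
        "PointInTimeRecoverySpecification": {"PointInTimeRecoveryEnabled": True},
    }


def restore_request(source, target, restore_to, now):
    """Arguments for restore_table_to_point_in_time; the restore creates a new table."""
    if not now - RECOVERY_WINDOW <= restore_to <= now:
        raise ValueError("restore time is outside the recovery window")
    return {
        "SourceTableName": source,
        "TargetTableName": target,  # must be a new table name
        "RestoreDateTime": restore_to,
    }


now = datetime(2024, 1, 15, 12, 0, tzinfo=timezone.utc)
enable = enable_pitr_request("PersonRecords")
restore = restore_request("PersonRecords", "PersonRecords-restored",
                          now - timedelta(hours=1), now)
```

In practice, the earliest valid restore time is also bounded by when PITR was enabled. `describe_continuous_backups` reports that bound as `EarliestRestorableDateTime`.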

Let's restore the table below and check what options we need to configure.

Below, we can see the new DynamoDB table. It contains all the items that existed in the source table at the chosen restore point. Note that this is a one-time copy: changes made to the source table after the restore are not synced to the new table.

By leveraging DynamoDB PITR, you can ensure the durability and recoverability of your table data, providing peace of mind in the event of data incidents or operational errors.

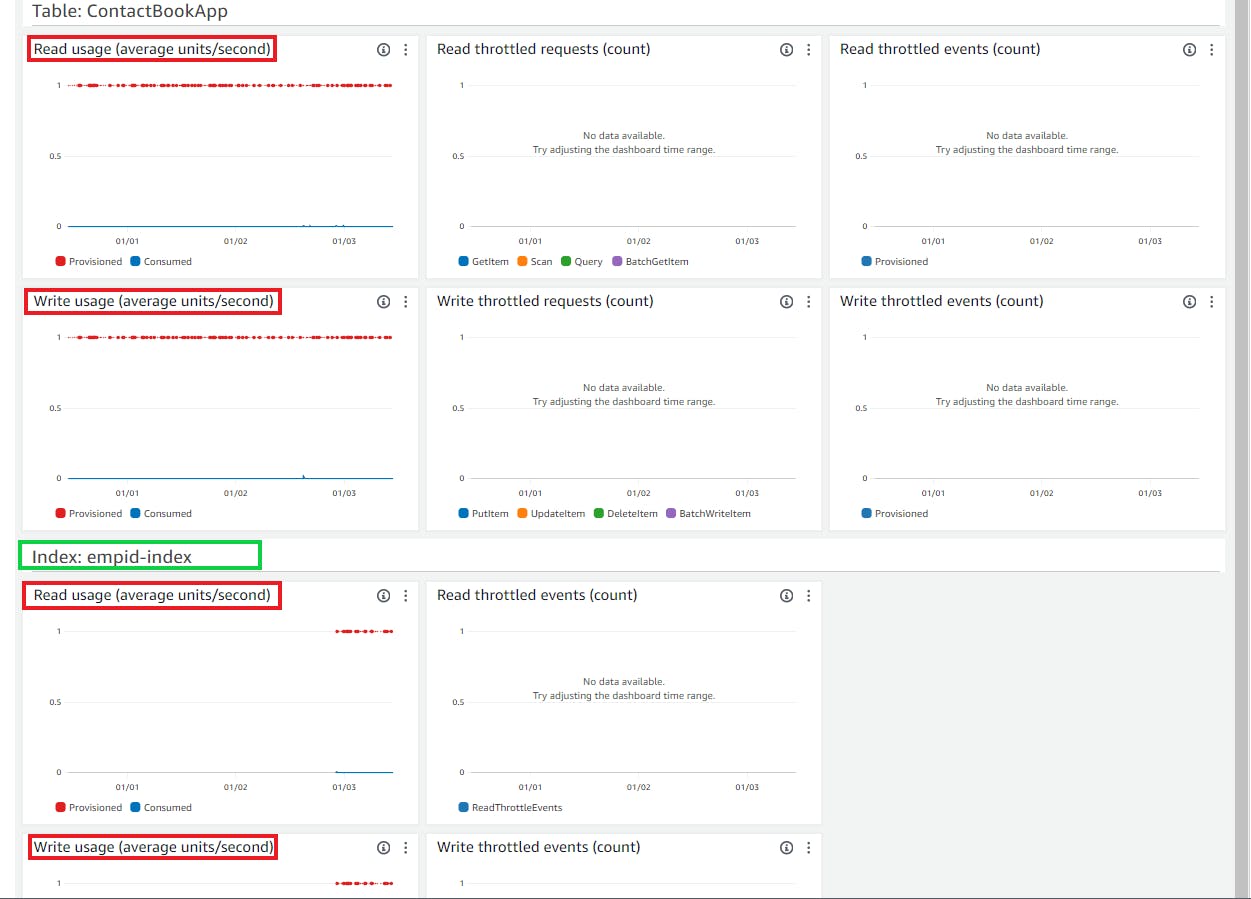

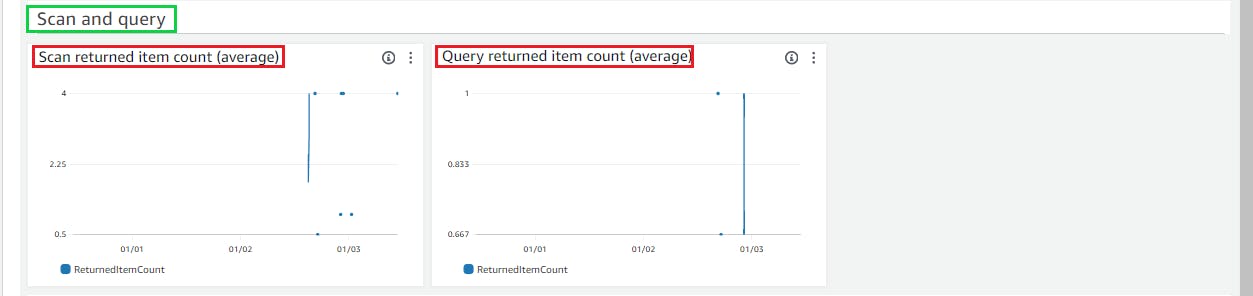

Monitoring and Logging in DDB Table

Monitoring and logging are integral aspects of managing a DynamoDB table, offering insights into performance, identifying potential issues, and ensuring optimal operation.

DynamoDB provides a suite of tools for effective monitoring and logging.

1. CloudWatch Metrics:

DynamoDB integrates seamlessly with Amazon CloudWatch, delivering a comprehensive set of metrics. These cover consumed and provisioned read and write capacity, throttled requests, user and system errors, and request latency, among others. Monitoring these metrics allows users to track the health and efficiency of their DynamoDB tables.

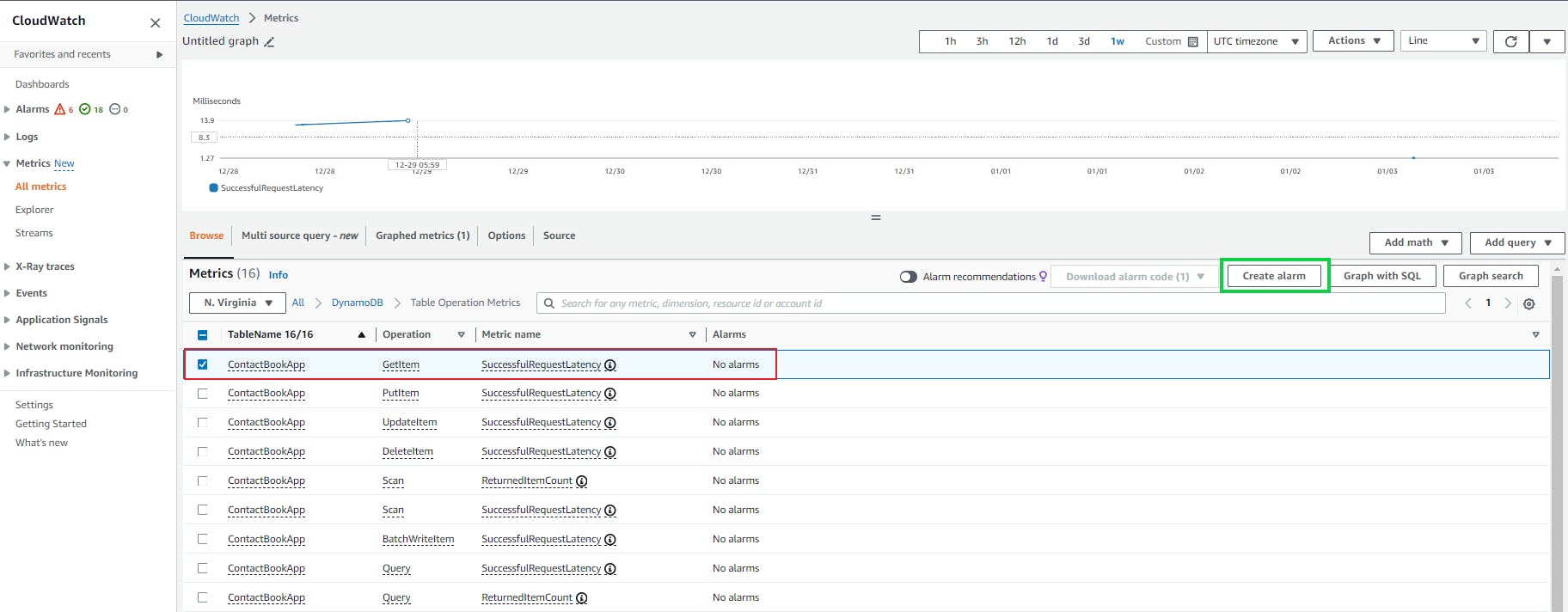

2. Alarms and Notifications:

CloudWatch Alarms enable proactive monitoring by allowing users to set thresholds for different metrics. When a threshold is breached, alarms trigger notifications, enabling quick responses to issues such as capacity overuse or increased error rates. This ensures that potential problems are addressed promptly.

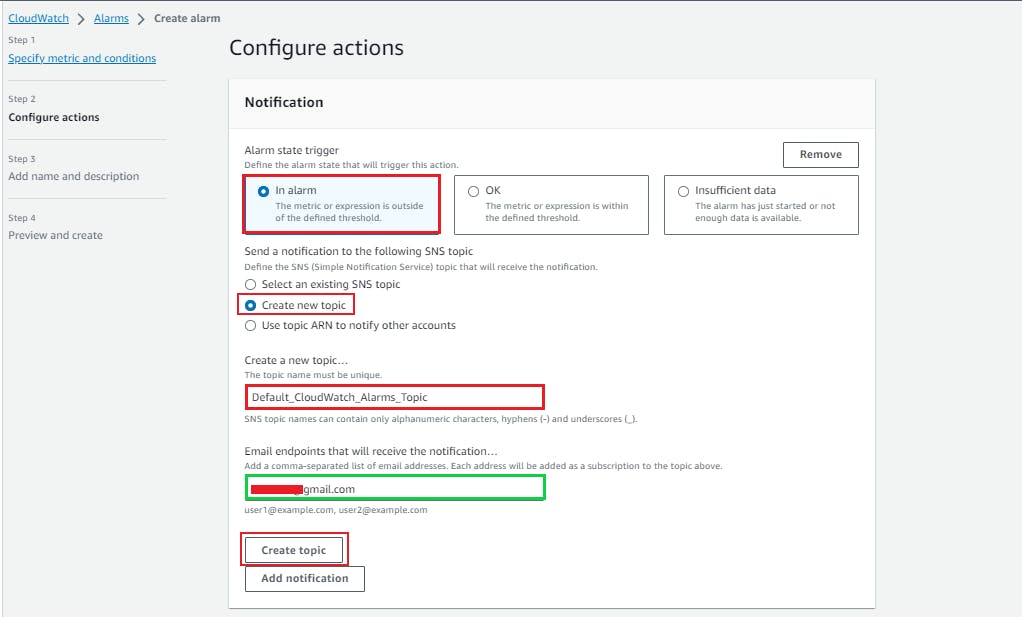

In the CloudWatch console, navigate to the All metrics section, search for the metric highlighted below, and choose Create alarm to configure the alarm on the required operation. Here we are doing it for the Scan operation, as seen in the screenshot below.

Alarm creation starts here. We specify the metric and the conditions, such as the threshold value, and an SNS topic to get notified when the metric breaches that threshold.
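The same alarm can be expressed as arguments to CloudWatch's `put_metric_alarm` (boto3 `cloudwatch` client). The post doesn't name the exact metric used, so this sketch assumes `ReturnedItemCount` for the Scan operation, which DynamoDB publishes with `TableName` and `Operation` dimensions. The SNS topic ARN, account ID, alarm name, and threshold are hypothetical.

```python
def scan_alarm_request(table_name, sns_topic_arn, threshold):
    """Arguments for put_metric_alarm on items returned by Scan calls."""
    return {
        "AlarmName": f"{table_name}-scan-returned-items",
        "Namespace": "AWS/DynamoDB",
        "MetricName": "ReturnedItemCount",
        "Dimensions": [
            {"Name": "TableName", "Value": table_name},
            {"Name": "Operation", "Value": "Scan"},
        ],
        "Statistic": "Sum",
        "Period": 300,  # evaluate 5-minute windows
        "EvaluationPeriods": 1,
        "Threshold": float(threshold),
        "ComparisonOperator": "GreaterThanThreshold",
        "AlarmActions": [sns_topic_arn],  # SNS topic that notifies subscribers
    }


request = scan_alarm_request(
    "PersonRecords", "arn:aws:sns:us-east-1:123456789012:ddb-alerts", 1000
)
```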

Here, we are mentioning the notification details, and need to provide the topic name and the email address where we want to get notified.

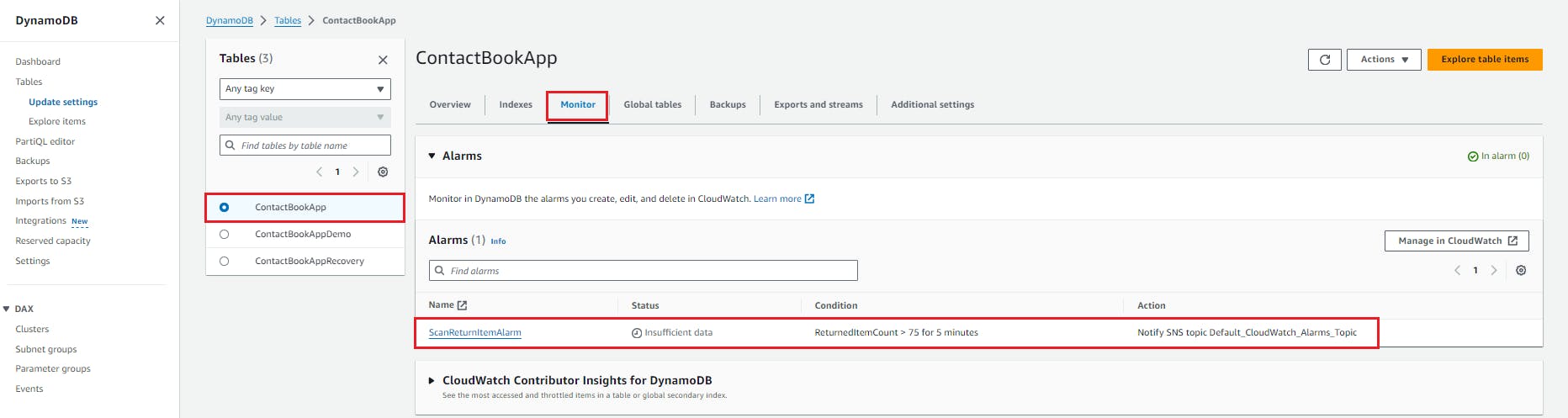

Review and create the alarm. It will appear in the CloudWatch alarms dashboard and will also be associated with the table under the Monitor section in DynamoDB.

We can see that the alarm has been associated with the DynamoDB table.

Global Tables in Amazon DynamoDB

DynamoDB Global Tables is a fully managed feature that extends DynamoDB across AWS Regions. It lets you create multi-Region, multi-active database tables that deliver fast, local read and write performance for massively scaled global applications, without having to build and maintain your own replication solution.

This feature is designed to provide a globally distributed, highly available, and low-latency database solution for applications with a geographically dispersed user base.

You can specify the AWS Regions where you want the tables to be available and DynamoDB will propagate ongoing data changes to all of them.

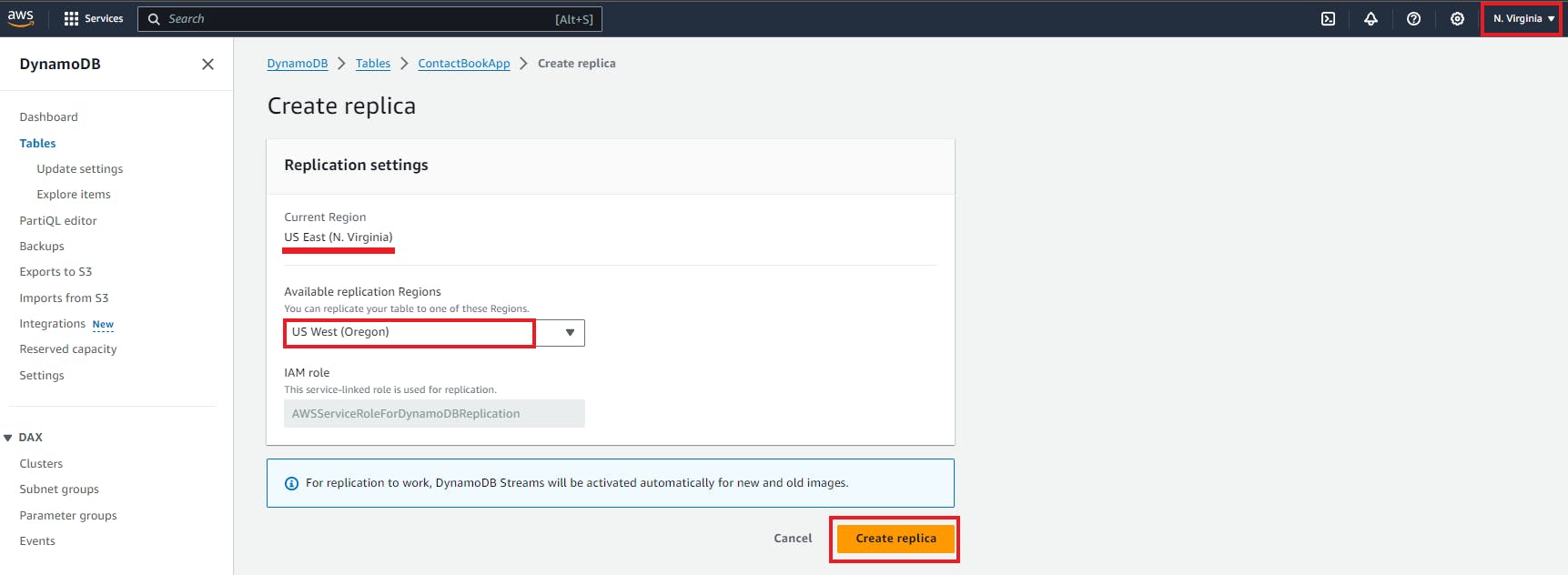

Creating Global Table (Replica)

To create a global table, we first add a replica from the source Region.

Here, we will get the option to choose the replication region (target region) and at a later point in time, when the replication is active, the DynamoDB table will get replicated to the target region.
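For tables on the current global tables version (2019.11.21), adding a replica through the API is an `update_table` call with a `ReplicaUpdates` entry, issued in the source Region. This sketch assumes the table already meets the global tables prerequisites, which the console handles in the walkthrough. `us-west-2` is the Region code for US West (Oregon), matching the target used here, and the helper names are hypothetical.

```python
def add_replica_request(table_name, region):
    """Arguments for update_table that add a replica in another Region."""
    return {
        "TableName": table_name,
        "ReplicaUpdates": [{"Create": {"RegionName": region}}],
    }


def remove_replica_request(table_name, region):
    """Arguments for update_table that delete a replica."""
    return {
        "TableName": table_name,
        "ReplicaUpdates": [{"Delete": {"RegionName": region}}],
    }


request = add_replica_request("PersonRecords", "us-west-2")  # US West (Oregon)
```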

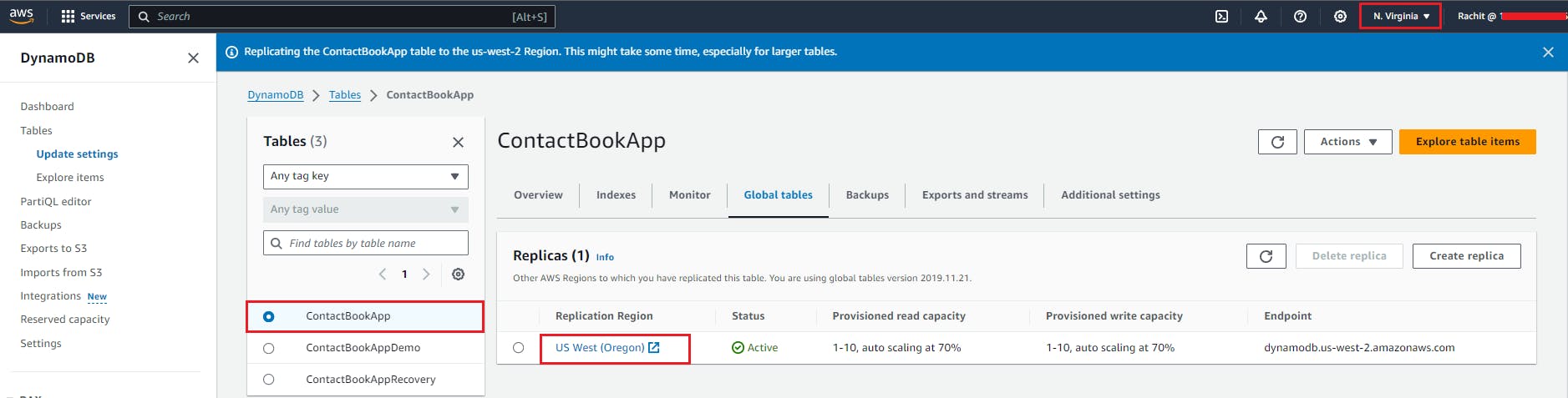

Replica has been created successfully and it will take some time to come to an active state.

Once the replica is active, we can check the target Region (US West (Oregon) in our case) and see that the table has been replicated there.

Benefits of using DDB Global Tables

Replicating your DynamoDB tables automatically across your choice of AWS Regions

Eliminating the difficult work of replicating data between Regions and resolving update conflicts, so you can focus on your application's business logic.

Helping your applications stay highly available even in the unlikely event of isolation or degradation of an entire Region.

Exports and Streams in DDB Table

Exports

Imagine needing to transfer your DynamoDB table data elsewhere, like for backup purposes, analytics, or migrating to another database. DynamoDB Exports come to the rescue.

They allow you to -

Export full table data or incremental updates: Choose to export all items as of a point in time, or only the items that changed during a time window you specify.

Specify export destination: Exports are written to an Amazon S3 bucket, where other services can pick up the data.

Control data format: Choose between DynamoDB JSON and Amazon Ion formats.

Trigger exports on demand: Each export is started from the console or through the API. For periodic exports, you can schedule the API call yourself, for example with a scheduled Lambda function. Exports require point-in-time recovery (PITR) to be enabled on the table, and they don't consume the table's read capacity.
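An export corresponds to the `export_table_to_point_in_time` API. The sketch below builds its arguments for the boto3 low-level client. The table ARN, account ID, bucket, prefix, and helper name are hypothetical, and the format check reflects the two formats DynamoDB export supports. Omitting `ExportTime` exports the latest available data.

```python
SUPPORTED_FORMATS = {"DYNAMODB_JSON", "ION"}


def export_request(table_arn, bucket, prefix, export_format="DYNAMODB_JSON"):
    """Arguments for export_table_to_point_in_time (a full export to S3)."""
    if export_format not in SUPPORTED_FORMATS:
        raise ValueError(f"unsupported export format: {export_format}")
    return {
        "TableArn": table_arn,
        "S3Bucket": bucket,
        "S3Prefix": prefix,  # exported files land under this key prefix
        "ExportFormat": export_format,
    }


request = export_request(
    "arn:aws:dynamodb:us-east-1:123456789012:table/PersonRecords",
    "my-ddb-exports",
    "person-records/",
)
```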

Key Benefits of Exports

Backup and Disaster Recovery: Export your data for safekeeping and ensure easy restoration in case of disruptions.

Analytics and Reporting: Query exported data in S3 with Amazon Athena, or load it into services like Amazon Redshift for in-depth analysis and reporting.

Migration and Data Sharing: Export data for seamless migration to other databases or sharing with collaborators.

Streams

Think of Streams as a real-time feed of changes happening in your DynamoDB table. They offer various benefits -

Continuous data change tracking: Capture every item creation, update, or deletion as it occurs.

Near-real-time delivery: Change records appear in the stream in near real time, shortly after the data is modified.

Trigger other AWS services: Use Streams to trigger Lambda functions, which can in turn start Step Functions workflows or send notifications to other services.

Flexible processing options: Process changes with DynamoDB Streams (for example, directly with Lambda), or stream them to Amazon Kinesis Data Streams for further analysis and longer retention. DynamoDB Streams keeps change records for 24 hours, so consumers need to read them within that window.
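To make the Streams-to-Lambda path concrete, here is a minimal handler sketch. Lambda delivers stream records in batches under `Records`. Each record has an `eventName` (`INSERT`, `MODIFY`, or `REMOVE`) and a `dynamodb` section with the item's `Keys` and, depending on the stream's view type, a `NewImage` and/or `OldImage` in DynamoDB JSON. The handler's summary format, the assumption that the table is keyed on PersonID, and the sample event are hypothetical.

```python
def handler(event, context=None):
    """Summarize each change record delivered from a DynamoDB stream."""
    changes = []
    for record in event.get("Records", []):
        action = record["eventName"]  # INSERT, MODIFY or REMOVE
        change = record["dynamodb"]
        person_id = change["Keys"]["PersonID"]["N"]  # assumes the PersonRecords key
        if action == "REMOVE":
            changes.append(f"{action} {person_id}")
        else:
            # NewImage is present when the stream view type includes new images.
            first_name = change.get("NewImage", {}).get("FirstName", {}).get("S", "?")
            changes.append(f"{action} {person_id} {first_name}")
    return changes


sample_event = {
    "Records": [
        {"eventName": "INSERT",
         "dynamodb": {"Keys": {"PersonID": {"N": "104"}},
                      "NewImage": {"PersonID": {"N": "104"}, "FirstName": {"S": "Alex"}}}},
        {"eventName": "REMOVE",
         "dynamodb": {"Keys": {"PersonID": {"N": "101"}}}},
    ]
}
print(handler(sample_event))  # ['INSERT 104 Alex', 'REMOVE 101']
```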

Key Benefits of Streams

Build event-driven applications: React to data changes in real time and trigger actions like automatic notifications, data processing pipelines, or other workflows.

Enhanced data analytics: Analyze data changes for insights into user behavior, trends, and application performance.

Improved operational efficiency: Automate tasks like data backup, archiving, or synchronization based on real-time data changes.

Choosing Between Exports and Streams

Exports are ideal for periodic data transfers or large-scale backups, while Streams shine when you need real-time change notifications and event-driven processing. You can even use them together, exporting data periodically for long-term storage while utilizing Streams for real-time processing and triggering actions.

By understanding and leveraging DynamoDB Exports and Streams, you can unlock a world of possibilities for data movement, analysis, and event-driven applications, enhancing the flexibility and efficiency of your data management strategies.

Additional Settings in DynamoDB Table

DynamoDB offers a range of additional settings that empower users to fine-tune the behavior of their tables and enhance overall management.

Read/Write Capacity

In provisioned capacity mode, DynamoDB lets users specify read and write capacity units to control the throughput of their tables. Combined with auto scaling, this helps handle varying workloads, maintaining performance during peak times while keeping costs down during periods of lower demand. Alternatively, on-demand capacity mode charges per request and requires no capacity planning.
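Capacity units follow simple arithmetic. One read capacity unit (RCU) covers one strongly consistent read per second of an item up to 4 KB, or two eventually consistent reads. One write capacity unit (WCU) covers one write per second of an item up to 1 KB. Item sizes are rounded up to the next unit. The sketch below applies those rules. Transactional reads and writes, which cost double, are left out, and the workload numbers are made up.

```python
import math


def read_capacity_units(item_size_kb, reads_per_second, strongly_consistent=True):
    """RCUs needed: each read consumes one unit per 4 KB (rounded up)."""
    units_per_read = math.ceil(item_size_kb / 4)
    total = units_per_read * reads_per_second
    # Eventually consistent reads cost half as much.
    return total if strongly_consistent else math.ceil(total / 2)


def write_capacity_units(item_size_kb, writes_per_second):
    """WCUs needed: each write consumes one unit per 1 KB (rounded up)."""
    return math.ceil(item_size_kb) * writes_per_second


print(read_capacity_units(6, 100))                             # 200
print(read_capacity_units(6, 100, strongly_consistent=False))  # 100
print(write_capacity_units(2.5, 100))                          # 300
```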

Table Capacity

Table capacity settings in DynamoDB allow users to view and manage their table's capacity utilization. This provides insights into how effectively provisioned throughput is utilized, aiding in capacity planning and ensuring efficient resource allocation.

Autoscaling Activities

DynamoDB supports autoscaling, allowing tables to automatically adjust their provisioned capacity based on actual demand. Autoscaling activities can be monitored and configured to ensure that the table dynamically scales up or down in response to changing workloads, providing cost savings and maintaining performance.

Time to Live (TTL)

DynamoDB's Time to Live (TTL) feature lets users designate an attribute that holds each item's expiration time as a Unix epoch timestamp in seconds. After that time passes, DynamoDB automatically deletes the item, typically within a few days, without consuming write capacity. This reduces storage costs and enforces data retention with less need for manual cleanup.
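Enabling TTL and stamping an item with an expiry could look like the sketch below. It builds `update_time_to_live` arguments that name the TTL attribute, plus an item whose TTL attribute holds a Unix epoch time in seconds, stored as a Number. The `ExpiresAt` attribute name, the 7-day lifetime, the item values, and the fixed timestamp (used so the result is predictable) are hypothetical.

```python
import time

SECONDS_PER_DAY = 24 * 60 * 60


def expires_at(days_from_now, now=None):
    """Epoch seconds `days_from_now` days in the future, the format TTL expects."""
    now = time.time() if now is None else now
    return int(now + days_from_now * SECONDS_PER_DAY)


ttl_request = {
    "TableName": "PersonRecords",
    "TimeToLiveSpecification": {"Enabled": True, "AttributeName": "ExpiresAt"},
}

# An item that becomes eligible for deletion 7 days after the fixed "now" below.
item = {
    "PersonID": {"N": "105"},
    "ExpiresAt": {"N": str(expires_at(7, now=1_700_000_000))},
}
```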

Encryption

DynamoDB encrypts all table data at rest by default to enhance data security, and this cannot be turned off. When creating a table, or later, users choose which AWS Key Management Service (KMS) key protects the data: an AWS owned key (the default), an AWS managed key, or a customer managed key.

Deletion Protection

DynamoDB supports deletion protection at the table level, which safeguards your tables against unintentional deletion of critical data. This feature when enabled, ensures an additional layer of safety, particularly for critical tables, by requiring explicit removal of deletion protection before a table can be deleted.

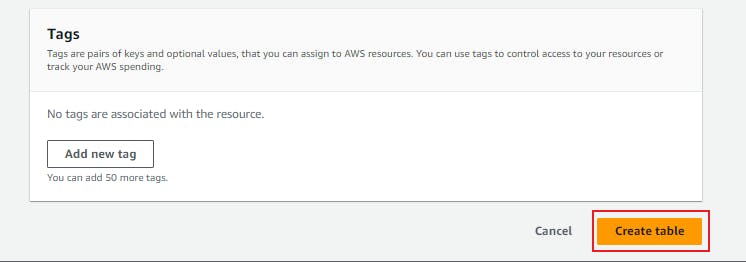

Tags

Tags in DynamoDB allow users to categorize and label their tables for organizational and cost-tracking purposes. Tags can be assigned to tables, providing metadata that simplifies resource identification and management. This is especially valuable in environments with numerous DynamoDB tables.

In summary, these additional settings in DynamoDB afford users the tools to optimize table performance, automate capacity adjustments, manage data expiration through TTL, enhance security with encryption, safeguard critical tables with deletion protection, and organize resources efficiently using tags.

DynamoDB Accelerator (DAX): A Turbo Boost for DynamoDB

DynamoDB Accelerator, or DAX, serves as a high-performance caching layer for DynamoDB, enhancing the speed and efficiency of read-intensive workloads. Designed to seamlessly integrate with DynamoDB, DAX operates as an in-memory cache, reducing the response times for queries and easing the load on the underlying DynamoDB tables.

Think of it as a high-speed buffer that keeps frequently accessed data in memory to avoid hitting the main DynamoDB table every time. AWS cites up to a 10x read performance improvement, with response times dropping from milliseconds to microseconds.

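DAX is particularly beneficial for read-heavy applications, such as real-time analytics, gaming leaderboards, and interactive dashboards. Writes made through DAX go to both DynamoDB and the cache (write-through), and cached items expire after a configurable time to live, which helps keep cached data fresh. Note that DAX caches eventually consistent reads only; strongly consistent reads are passed through to DynamoDB.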
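The read-through, write-through idea behind DAX can be illustrated with a toy in-memory cache. This is not the DAX client, and it does not reflect how DAX is implemented internally. It only models the behavior described above: reads are served from memory until an entry's TTL expires, cache misses fall back to the table, and writes go to both the table and the cache. The class name, the dict standing in for the table, and the fake clock are hypothetical.

```python
import time


class ReadThroughCache:
    """Toy read-through/write-through cache with per-entry TTL (illustration only)."""

    def __init__(self, fetch, store, ttl_seconds, clock=time.monotonic):
        self._fetch = fetch      # called on a miss, e.g. a DynamoDB GetItem
        self._store = store      # called on every write, e.g. a DynamoDB PutItem
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}       # key -> (expiry time, value)
        self.misses = 0

    def get(self, key):
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]      # hit: no trip to the table
        self.misses += 1
        value = self._fetch(key)  # miss or expired entry: read from the table
        self._entries[key] = (now + self._ttl, value)
        return value

    def put(self, key, value):
        self._store(key, value)   # write-through: update the table first...
        self._entries[key] = (self._clock() + self._ttl, value)  # ...then the cache


table = {"101": {"FirstName": "Fred"}}
fake_now = [0.0]
cache = ReadThroughCache(table.get, table.__setitem__, ttl_seconds=60,
                         clock=lambda: fake_now[0])
cache.get("101")    # miss: read from the "table"
cache.get("101")    # hit: served from memory
fake_now[0] = 61.0
cache.get("101")    # entry expired: read from the "table" again
cache.put("102", {"FirstName": "Mary"})  # written to the table and the cache
cache.get("102")    # hit: no extra table read
```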

Why use DynamoDB Accelerator (DAX)

Here are some of the key benefits of using DynamoDB Accelerator (DAX)

Unmatched Read Performance: DAX is a champion for read-heavy workloads, ideal for applications like social media feeds, e-commerce product listings, or real-time gaming leaderboards.

Reduced DynamoDB Costs: By offloading frequent reads from DynamoDB, you can potentially optimize your table capacity and save on costs.

Improved User Experience: DAX keeps your users happy with seamless, lightning-fast responses to their queries.

Simplified Development: DAX is API-compatible with DynamoDB, so adopting it usually means switching to the DAX client and pointing it at your DAX cluster rather than rewriting your queries.

When to use DynamoDB Accelerator (DAX)

Here are some of the key points on when to use DynamoDB Accelerator (DAX)

Frequent reads of the same data: If your application constantly retrieves the same information, DAX can significantly improve response times.

High user traffic with spikes: DAX can handle sudden bursts of read traffic while keeping your DynamoDB table stable.

Read-heavy applications: If your application prioritizes fast reads over writes, DAX is a perfect fit.

Real-World Examples of DynamoDB

Let's look at some examples that demonstrate DynamoDB's versatility and its ability to power real-world applications that demand scalability, performance, and flexibility.

Social Media Apps

Picture a bustling social network: Millions of users share posts, photos, and videos every second.

DynamoDB's Role: It stores user profiles, posts, comments, likes, and activity feeds, handling massive data volumes and lightning-fast reads and writes to keep the app responsive and engaging.

Benefits: It can handle unpredictable surges in traffic without breaking a sweat, ensuring a smooth user experience even during peak hours.

Online Shopping

Imagine a popular e-commerce site: Thousands of products, customer reviews, order history, and real-time inventory updates.

DynamoDB's Role: It stores product catalogs, customer information, shopping carts, order details, and real-time inventory levels, ensuring accurate product listings and smooth checkout processes.

Benefits: It can handle high traffic during sales events and flash sales, preventing website crashes and frustrating timeouts.

Gaming Platforms

Envision a multiplayer game with millions of players: Game scores, player profiles, leaderboards, and real-time interactions.

DynamoDB's Role: It stores player scores, rankings, in-game items, and real-time match data, enabling seamless gameplay and fast-paced competition.

Benefits: It can handle global player bases and massive data updates without lag or delays, delivering a smooth and immersive gaming experience.

In summary, Amazon DynamoDB simplifies database management, provides high performance, and is well-suited for applications demanding scalability and low-latency access to data.

References

Getting started with DynamoDB Table

Global and Local Secondary Indexes

Create a Global Secondary Indexes

Restoring a DynamoDB table to a point in time

Conclusion

We've embarked on a journey through the dynamic world of DynamoDB, exploring its power to manage diverse data with flexibility and speed. We covered its foundational components, from primary key structures and the nuances of simple and composite keys to creating tables and items. Global and Local Secondary Indexes (GSI & LSI) enhance query flexibility, Point-in-Time Recovery (PITR) ensures data resilience through continuous backups, and Global Tables replicate a table into other Regions. DynamoDB also provides powerful mechanisms for data export and real-time change processing through Exports and Streams, along with additional table settings for capacity, TTL, encryption, deletion protection, and tags.

So, whether you're crafting a mobile app reaching millions, building a real-time analytics platform, or powering a global e-commerce giant, DynamoDB stands ready to be your trusted data partner. Remember, the possibilities are limitless, and with DynamoDB at your side, your data is always ready to propel you forward.

Thank you so much for reading my blog! 😊 I hope you found it helpful and informative. If you did, please 👍 give it a like and 💌 subscribe to my newsletter for more of this type of content. 💌

I'm always looking for ways to improve my blog, so please feel free to leave me a comment or suggestion. 💬

Thanks again for your support!
